bagging predictors. machine learning

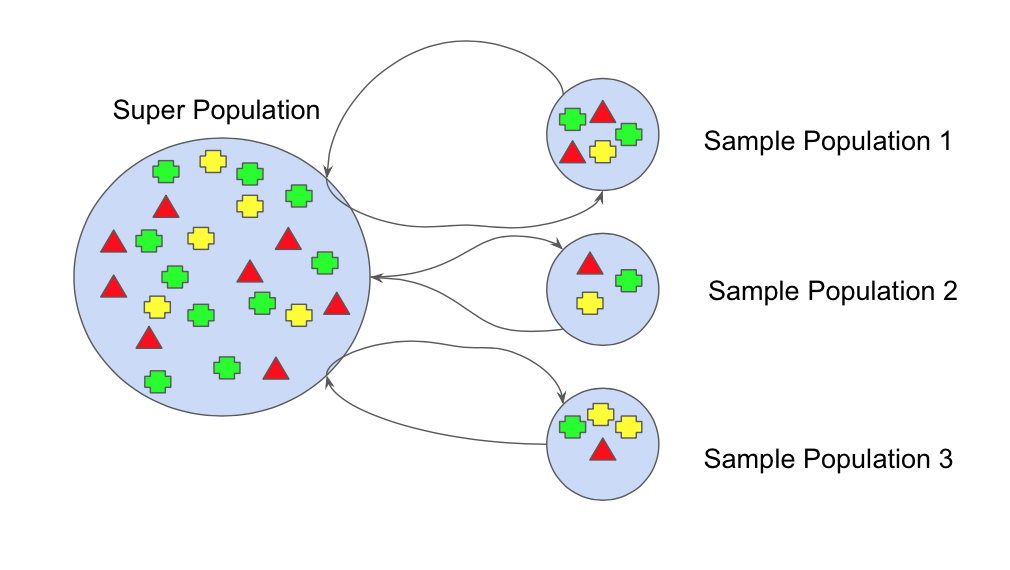

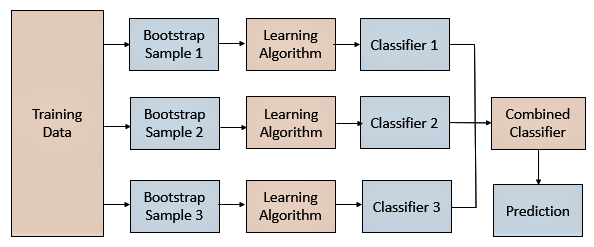

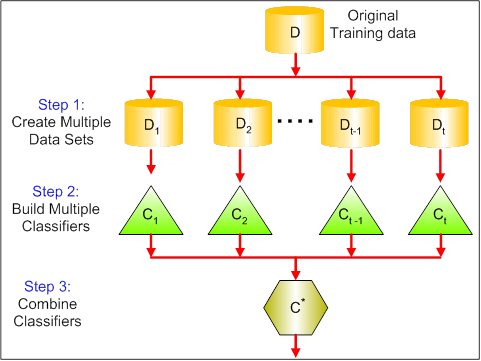

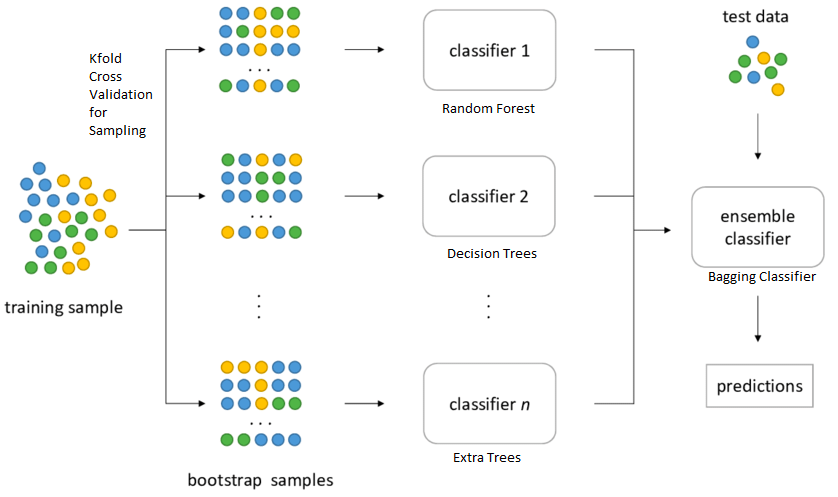

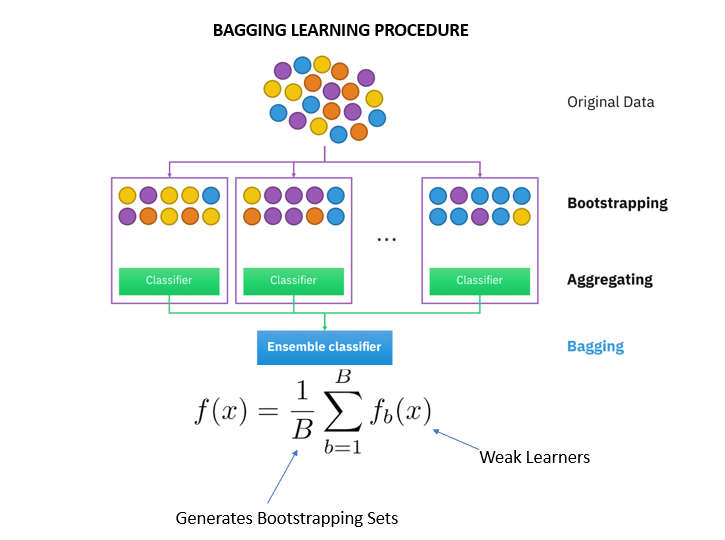

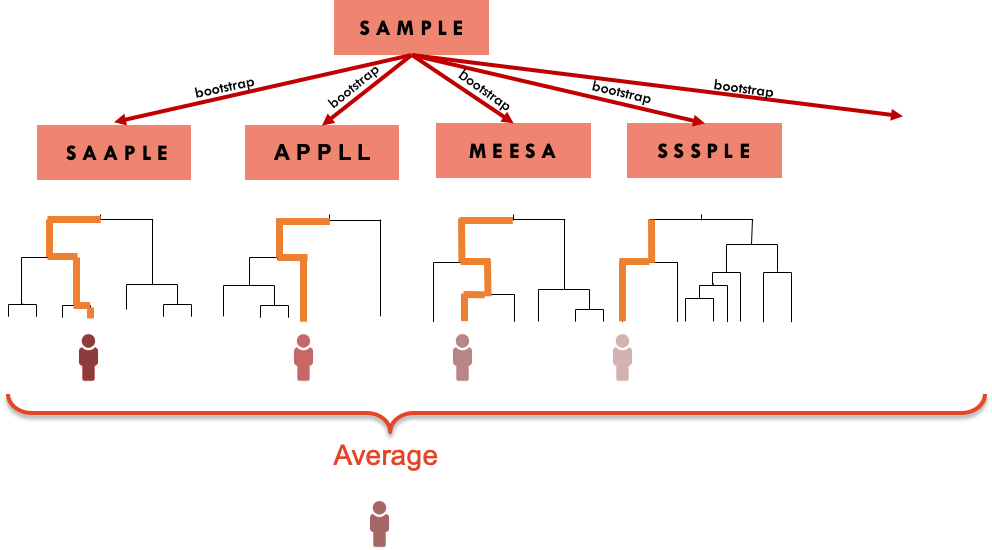

In Section 242 we learned about bootstrapping, a resampling procedure that creates b new bootstrap samples by drawing with replacement from the original training data. Aggregation in bagging refers to combining the predictions of the models fitted to those samples into a single prediction.
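The resampling step can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the function name `bootstrap_samples`, the seed, and the toy data are assumptions made for the example, not part of the original text.

```python
import random

def bootstrap_samples(data, b, seed=0):
    """Return b bootstrap samples, each the same size as data.

    Hypothetical helper: each sample is drawn with replacement,
    so an item may appear several times or not at all.
    """
    rng = random.Random(seed)
    n = len(data)
    return [rng.choices(data, k=n) for _ in range(b)]

# Toy training data (assumed values, for illustration only).
training_data = [10, 20, 30, 40, 50, 60, 70]
samples = bootstrap_samples(training_data, b=3)

for i, sample in enumerate(samples, start=1):
    print(f"bootstrap sample {i}: {sample}")
```

Because the draws are with replacement, each bootstrap sample usually contains duplicates and leaves out some of the original observations.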

Machine Learning What Is The Difference Between Bagging And Random Forest If Only One Explanatory Variable Is Used Cross Validated

Bagging predictors is a method for generating multiple versions of a predictor and using these to get an aggregated predictor.

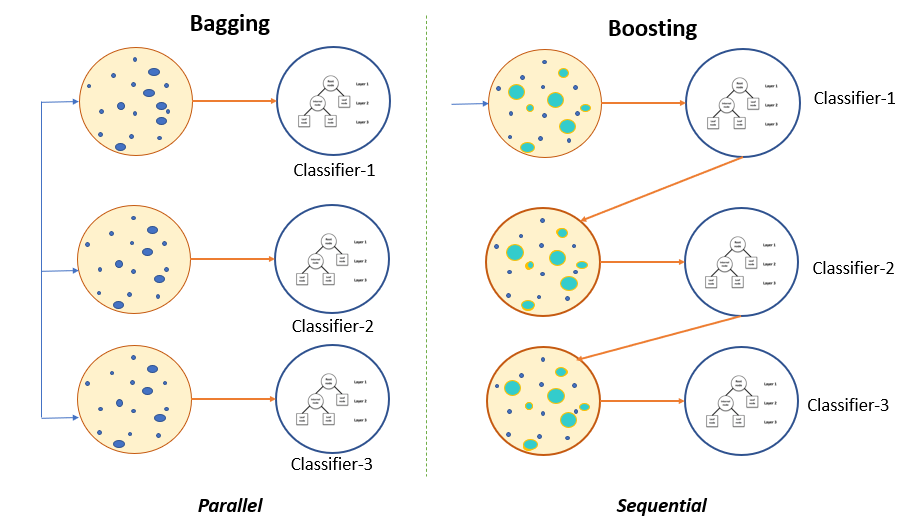

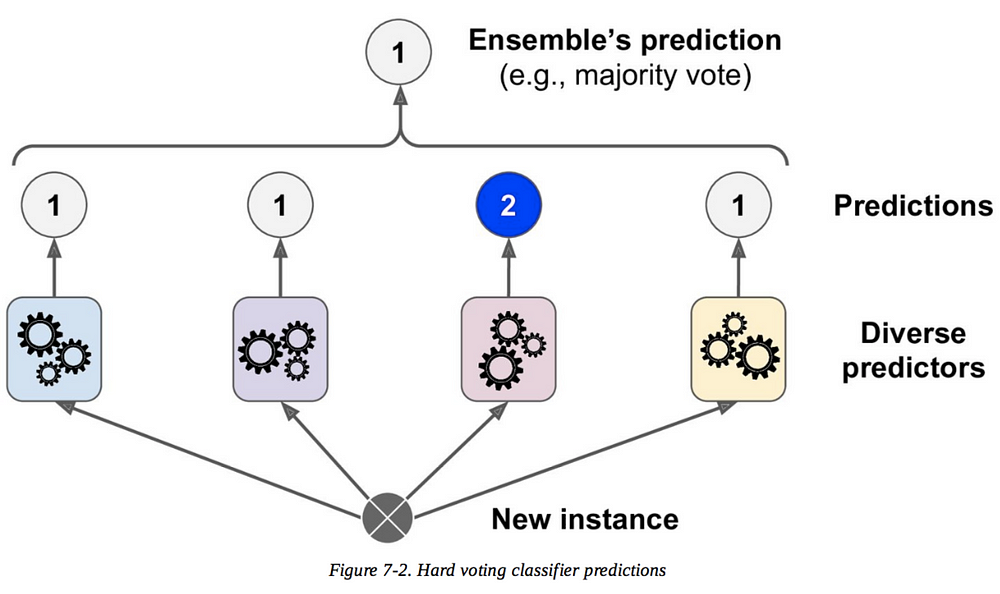

The aggregation averages over the versions when predicting a numerical outcome and does a plurality vote when predicting a class. Bagging is a parallel ensemble method in which every model is constructed independently.
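The two aggregation rules can be written down directly. The sketch below assumes the individual predictions are already available as a list; the function names are hypothetical and the example values are made up.

```python
from collections import Counter
from statistics import mean

def aggregate_regression(predictions):
    """Average the numerical predictions of the individual versions."""
    return mean(predictions)

def aggregate_classification(predictions):
    """Return the class predicted most often (plurality vote).

    Ties are not treated specially in this sketch.
    """
    return Counter(predictions).most_common(1)[0][0]

# Assumed example predictions from three versions of a predictor.
numeric_result = aggregate_regression([2.0, 3.0, 4.0])
class_result = aggregate_classification(["cat", "dog", "cat"])

print(numeric_result)  # 3.0
print(class_result)    # cat
```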

In the example, the data set used to train each predictor has size 4. Bagging also reduces variance and helps to avoid overfitting, which is why it is effective in reducing prediction errors.

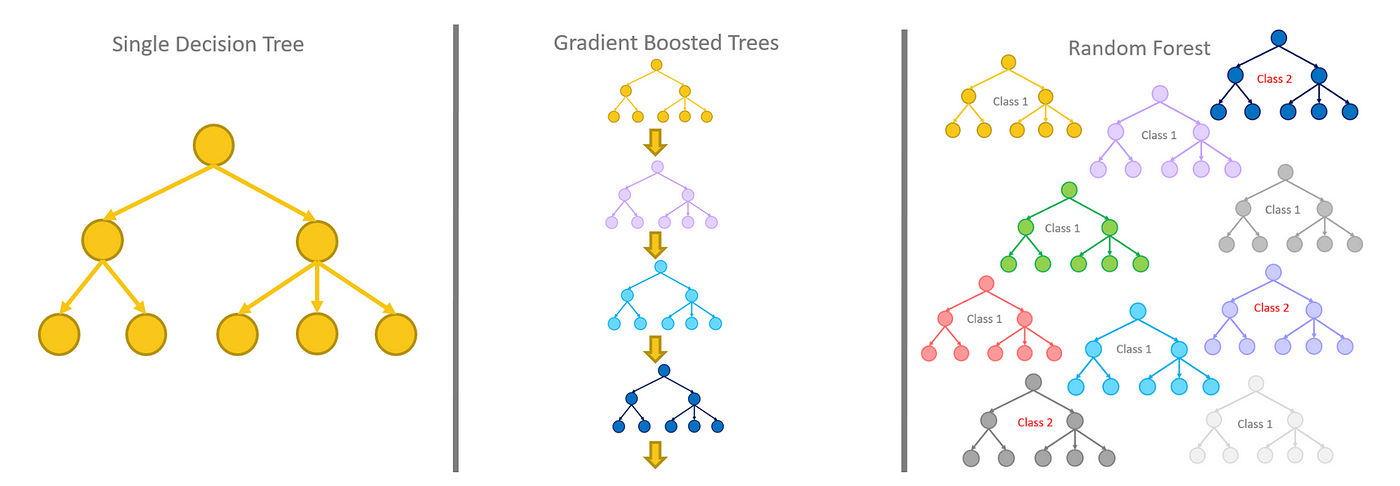

The diversity of the members of an ensemble is known to be an important factor in determining its generalization error. For numerical prediction, the mean squared error of the aggregated predictor φ_A(x) is no higher than, and often lower than, the mean squared error of the single predictor φ(x, L) averaged over learning sets L.
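The mean squared error claim follows from a short argument. The sketch below uses the notation of Breiman's paper, which is assumed here: $\varphi(x, L)$ is the predictor trained on learning set $L$, and $\varphi_A(x) = E_L\,\varphi(x, L)$ is the aggregated predictor. For a fixed input $x$ and outcome $y$:

$$
\begin{aligned}
E_L\,\bigl(y - \varphi(x, L)\bigr)^2
  &= y^2 - 2y\,E_L\,\varphi(x, L) + E_L\,\varphi^2(x, L) \\
  &\ge y^2 - 2y\,\varphi_A(x) + \varphi_A^2(x) \\
  &= \bigl(y - \varphi_A(x)\bigr)^2 .
\end{aligned}
$$

The middle step uses $E_L\,\varphi(x, L) = \varphi_A(x)$ together with $E[Z^2] \ge (E[Z])^2$ applied to $Z = \varphi(x, L)$. Averaging both sides over the joint distribution of $(x, y)$ gives the inequality between the two mean squared errors. The gap equals the variance of $\varphi(x, L)$ over learning sets, so it is large exactly when the predictor changes a lot from one learning set to another.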

The multiple versions are formed by making bootstrap replicates of the learning set. Bootstrap aggregating, also called bagging (from bootstrap aggregating), is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression.

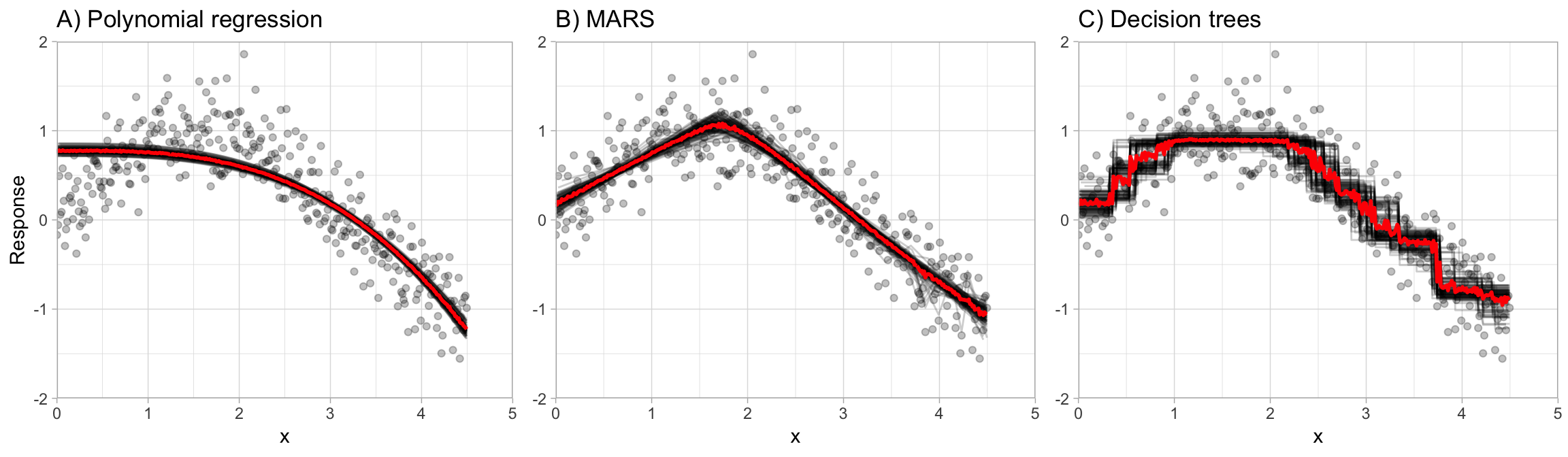

Bagging is effective in reducing prediction errors when the single predictor φ(x, L) is highly variable. We see that both the bagged and subagged predictors outperform a single tree in terms of mean squared prediction error (MSPE). This chapter illustrates how we can use bootstrapping to create an ensemble of predictions.
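A small simulation can make this concrete. The sketch below is an illustration under stated assumptions, not the experiment the text refers to: it uses a one-split regression stump as the variable base predictor, synthetic data from a noisy quadratic, and 25 bootstrap replicates. All function names, the seed, and the data-generating process are hypothetical choices.

```python
import random
from statistics import mean

def fit_stump(points):
    """Fit a one-split regression stump to (x, y) pairs; return a predictor."""
    pts = sorted(points)
    best = None
    for i in range(1, len(pts)):
        if pts[i][0] == pts[i - 1][0]:
            continue  # no threshold separates equal x values
        left = [y for _, y in pts[:i]]
        right = [y for _, y in pts[i:]]
        ml, mr = mean(left), mean(right)
        sse = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, (pts[i - 1][0] + pts[i][0]) / 2, ml, mr)
    if best is None:  # all x identical: fall back to the overall mean
        overall = mean(y for _, y in pts)
        return lambda x: overall
    _, threshold, ml, mr = best
    return lambda x: ml if x <= threshold else mr

def mse(predict, data):
    """Mean squared error of a predictor on (x, y) pairs."""
    return mean((y - predict(x)) ** 2 for x, y in data)

rng = random.Random(0)

def make_data(n):
    """Synthetic data (assumed): y = x^2 plus Gaussian noise."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, x * x + rng.gauss(0, 0.3)) for x in xs]

train, test = make_data(30), make_data(200)

# One stump per bootstrap replicate of the training set.
stumps = [fit_stump(rng.choices(train, k=len(train))) for _ in range(25)]

def bagged(x):
    """Aggregated predictor: average of the bootstrap stumps."""
    return mean(s(x) for s in stumps)

avg_individual_mse = mean(mse(s, test) for s in stumps)
bagged_mse = mse(bagged, test)
single_mse = mse(fit_stump(train), test)

print(f"average MSE of individual bootstrap stumps: {avg_individual_mse:.4f}")
print(f"MSE of the bagged predictor:                {bagged_mse:.4f}")
print(f"MSE of one stump on the full training set:  {single_mse:.4f}")
```

By convexity of the squared error, the bagged predictor's error can never exceed the average error of the individual bootstrap stumps. Whether it also beats a single stump fitted to the full training set depends on the data and the seed, so that comparison is printed rather than assumed.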

Breiman, L. (1996). Bagging Predictors. Machine Learning, 24, 123-140.

Ensemble methods like bagging and boosting that combine the decisions of multiple hypotheses are some of the strongest existing machine learning methods.

For a subsampling fraction of approximately 0.5, subagging achieves nearly the same prediction performance as bagging while coming at a lower computational cost.
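The difference from bagging lies only in how the training subsets are drawn. The sketch below assumes the usual definition of subagging (subsample aggregating), in which each subset is drawn without replacement and is a fixed fraction of the training set; the function name and toy data are hypothetical.

```python
import random

def subagging_samples(data, b, fraction=0.5, seed=0):
    """Return b subsamples, each drawn WITHOUT replacement.

    Each subsample holds about `fraction` of the data, so every base
    model is trained on fewer points than in bagging, which is where
    the lower computational cost comes from.
    """
    rng = random.Random(seed)
    m = max(1, int(fraction * len(data)))
    return [rng.sample(data, m) for _ in range(b)]

# Toy training data (assumed values, for illustration only).
data = list(range(10))
subsamples = subagging_samples(data, b=4)

for i, sub in enumerate(subsamples, start=1):
    print(f"subsample {i}: {sorted(sub)}")
```

Each subsample here has 5 distinct items, whereas a bootstrap sample of the same data would have 10 items with repeats.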

Hence many weak models are combined to form a better model.

In the above example, the training set has 7 samples. The visual shows how training instances are sampled for a predictor in bagging ensemble learning.

Bootstrap aggregating, also called bagging, is one of the first ensemble algorithms.

Breiman, L. Bagging Predictors. © 1996 Kluwer Academic Publishers, Boston.

Bagging is used when the aim is to reduce variance.

Bagging Predictors. Leo Breiman (leo@stat.berkeley.edu), Statistics Department, University of California, Berkeley, CA 94720.

Bagging Classifier Instead Of Running Various Models On A By Pedro Meira Time To Work Medium

Bootstrap Aggregating Wikipedia

Ensemble Methods In Machine Learning Bagging Versus Boosting Pluralsight

Bagging Bootstrap Aggregation Overview How It Works Advantages

Bagging Vs Boosting In Machine Learning Geeksforgeeks

Bagging Machine Learning Through Visuals 1 What Is Bagging Ensemble Learning By Amey Naik Machine Learning Through Visuals Medium

Tree Based Algorithms Implementation In Python R

Ensemble Models Bagging Boosting By Rosaria Silipo Analytics Vidhya Medium

Bagging Classifier Python Code Example Data Analytics

Ensemble Methods In Machine Learning Bagging Subagging


An Introduction To Bagging In Machine Learning Statology

Ensemble Learning 5 Main Approaches Kdnuggets

2 Bagging Machine Learning For Biostatistics

What Is Bagging In Machine Learning And How To Perform Bagging

Pdf Bagging Predictors Semantic Scholar
